Early Days

One of my early interests in programming was 3D computer graphics. The challenges then were much different from what they are today. Everything had to be done by hand - there was no dedicated hardware, there were no engines. Every pixel seen on screen was deliberately placed by the game programmer (or just “programmer”; the notion of separate “game” and “graphics” programmers didn’t really exist yet). You had to implement your own matrix libraries and transform stack, line drawing routines, edge clipping, scan-line rendering, polygon culling, scene ordering, and so on. And this is apart from all the peripheral things you also had to worry about, such as image and model file loading, key and joystick input handling, and memory management. Layer on the fact that learning resources were incredibly sparse compared to today, and it made 3D graphics programming in that era a challenge of a different nature.

Which is not to say graphics programming is easier today - in fact, it’s extremely challenging, just in new ways. But the something-from-nothing, do-it-yourself nature is definitely gone, and as it slowly departed over time, so did my general interest in 3D graphics. Once you could send transform, color, and texture specifications to dedicated 3D hardware, thereby offloading all the fun calculations, 3D graphics felt solved and uninteresting. As a result, I ended up writing software-only renderers long after it was a sensible thing to do, and then eventually I stopped graphics programming altogether.

In some ways I regret not sticking with 3D, as things did become interesting again once hardware moved beyond fixed-function pipelines to programmable hardware with vertex and fragment shaders and the like. But I still love the path I took on my 3D graphics knowledge journey - even if I did step away from it. There is a certain trial in having to really understand the 3D math and all the rest that I value in my own education and, frankly, don’t see in many newer game programmers. That is not a fault of, or even a problem with, the programmers really; it just says something about what game programming has become.

Anyway, I give all this context to make it easier to appreciate the early work I’m showing here. All of this stuff is laughably trivial now (and some of it was trivial even at the time, but for my stubborn insistence on doing it all by hand), but back then each piece felt like a real achievement.

And so, without further ado, here is a quick review of various early 3D studies from my game programming career.

“Rotate M”

When I first started 3D programming, it was a bit of a competition between me and my good friend and programming buddy, Mark. We wanted to see who could get something, anything, resembling a 3D polygon on screen first. Well, he won. But that kicked off a series of one-upmanship projects as we each tried to outdo the other. This “Rotate M” program was my response to his having shown me a rotating smiley face that totally blew me away.

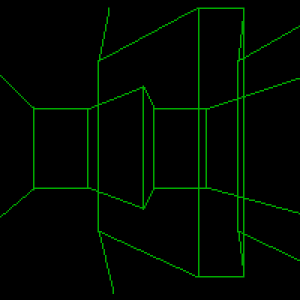

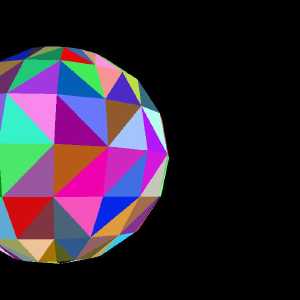

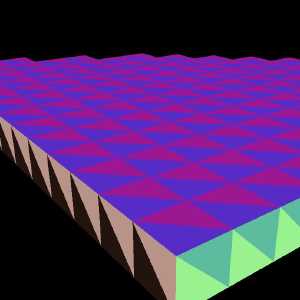

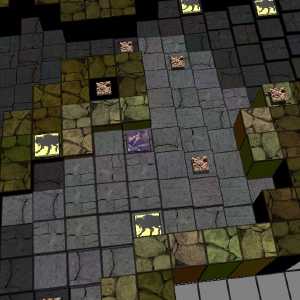

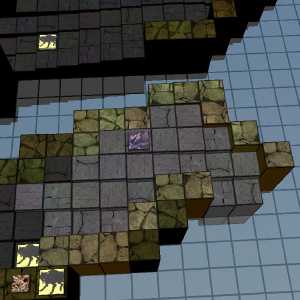

Texture Mapping

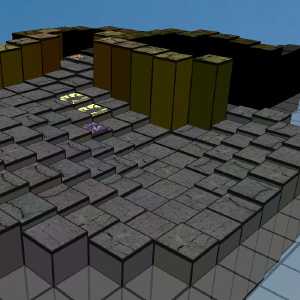

Not too long after getting wireframe graphics working, we moved on to filled polygon techniques. Above are screenshots from our first fill and texture rasterizer. It’s been so long, I don’t remember if I wrote this or Mark did, or if we worked on it together. In any case, we learned the techniques together, so I suppose it’s all the same. The texture mapping is affine only - I was not smart enough then to understand and implement the perspective-correct version. Too bad I have no video of this demo, but if I did, it would show all the classic texture warping you get with affine rendering. Then again, anyone familiar with PlayStation 1 graphics has seen these artifacts aplenty.
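For anyone who has not run into the difference, here is a minimal sketch of how the two approaches diverge when interpolating a single texture coordinate along a span. It is not the original rasterizer code; the function names `affine_u` and `perspective_u` and the sample depths are made up for illustration. Affine mapping interpolates `u` directly in screen space, while the perspective-correct version interpolates `u/z` and `1/z` and divides at each pixel.

```python
def affine_u(u0, u1, t):
    """Interpolate u linearly in screen space (affine mapping)."""
    return u0 + (u1 - u0) * t


def perspective_u(u0, z0, u1, z1, t):
    """Interpolate u/z and 1/z linearly in screen space, then divide.

    z0 and z1 are the view-space depths at the two ends of the span
    and must be non-zero.
    """
    inv_z = (1.0 - t) / z0 + t / z1
    u_over_z = (1.0 - t) * (u0 / z0) + t * (u1 / z1)
    return u_over_z / inv_z


if __name__ == "__main__":
    # Hypothetical span: near end at depth 1, far end at depth 3.
    for step in range(5):
        t = step / 4
        print(t, affine_u(0.0, 1.0, t), perspective_u(0.0, 1.0, 1.0, 3.0, t))
```

With these sample depths, the screen-space midpoint gets `u = 0.5` from the affine version but about `0.25` from the perspective-correct one. That gap is the warping: the texture appears to slide and bend as the polygon tilts away from the viewer. When both ends are at the same depth, the two functions agree.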

Fast Forward

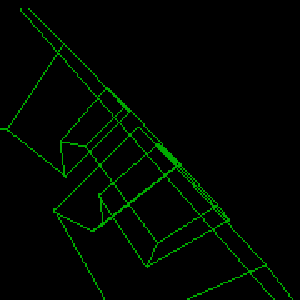

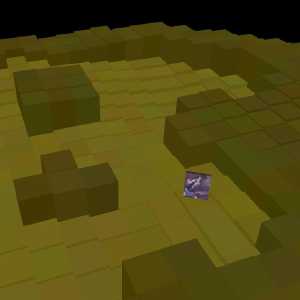

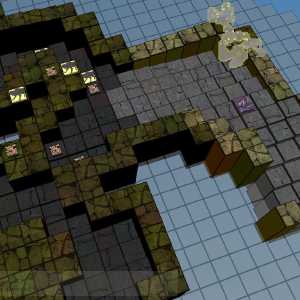

Those early 3D studies stopped once Mark and I went off to college. In college I worked on a few 3D projects, but not many, and nothing really worth showing here. But after college, I began to regain interest in software rendering. In particular, I was eager to see what I could do now that computers were so much faster. Of course, higher resolutions were possible. Also, I could afford to texture every polygon (wow! :b).

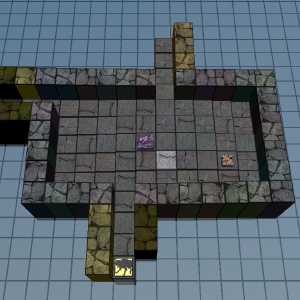

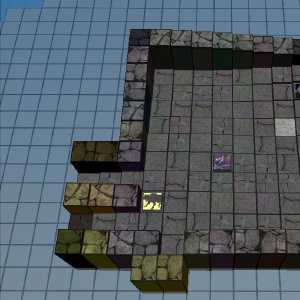

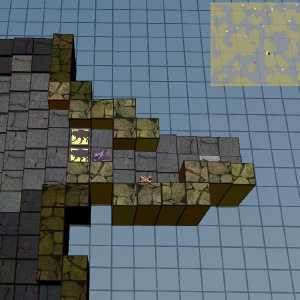

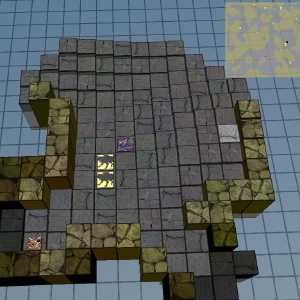

Above is an example of a dungeon crawler roguelike I was working on. By this time, I understood the fundamentals much better, and the 3D code was, in my opinion, professional grade. It’s just…I still refused to let the 3D hardware help me. So, this is light years behind what was actually possible at the time. Still, I’m rather fond of it.

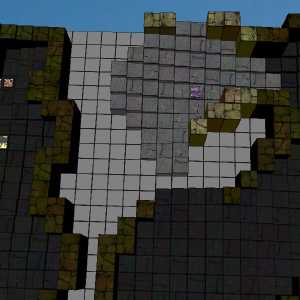

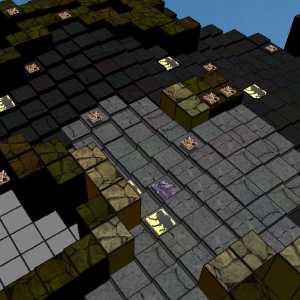

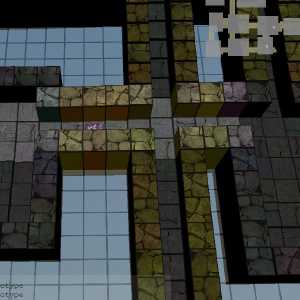

Stuck in My Ways

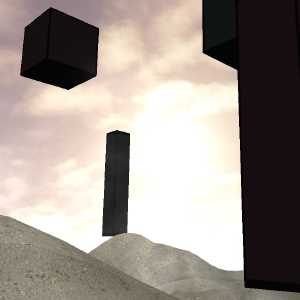

This project was something I submitted as a demo to a prospective employer. No surprises, I didn’t get the job! Haha. From a home-grown tech perspective, I think it reflects kindly enough on my skill, except that you have to know much more about what it is (and isn’t) to fully appreciate it. If you’re used to DirectX-driven engine demos, or if you’ve never coded from first principles yourself, it more than fails to impress; it’s downright repellent. Sadly, I was stuck in my old ways, and too much of a doofus to see that then.

Anyway, what I personally liked about this demo was the skydome implementation. Skydomes are as basic as they get, but it was a fun exercise to procedurally generate the dome geometry and its UV texture coordinates, and then see it in game and working to such convincing effect. Simple as it is, I think it was really quite neat. But perhaps you had to be there. I also liked the camera following routine, which has the camera following a spline that’s generated in real time as the character moves around.
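To give a flavor of what procedurally generating a dome involves, here is a rough sketch, not the code from the demo. The function name `make_skydome`, its parameters, and the choice of UV mapping (a straight top-down projection of the dome onto the texture) are all assumptions for illustration; other mappings are just as plausible.

```python
import math


def make_skydome(radius=1.0, rings=4, segments=8):
    """Build a hemisphere as rings of vertices from horizon to zenith.

    Returns (vertices, uvs, triangles). UVs are an assumed top-down
    planar projection: the zenith lands at the texture center (0.5, 0.5)
    and the horizon lands on the unit circle inscribed in the texture.
    """
    vertices = []
    uvs = []
    for ring in range(rings + 1):
        # Elevation runs from 0 (horizon) to pi/2 (zenith).
        elev = (math.pi / 2) * ring / rings
        y = radius * math.sin(elev)
        ring_radius = radius * math.cos(elev)
        for seg in range(segments):
            azim = 2 * math.pi * seg / segments
            x = ring_radius * math.cos(azim)
            z = ring_radius * math.sin(azim)
            vertices.append((x, y, z))
            uvs.append((0.5 + 0.5 * x / radius, 0.5 + 0.5 * z / radius))

    # Stitch neighboring rings together with two triangles per quad.
    # The top ring collapses to a point, so half of its triangles are
    # degenerate; a real renderer would likely use a single cap vertex.
    # Winding order is not tuned for any particular culling convention.
    triangles = []
    for ring in range(rings):
        for seg in range(segments):
            nxt = (seg + 1) % segments
            a = ring * segments + seg
            b = ring * segments + nxt
            c = (ring + 1) * segments + seg
            d = (ring + 1) * segments + nxt
            triangles.append((a, b, c))
            triangles.append((b, d, c))
    return vertices, uvs, triangles


if __name__ == "__main__":
    verts, uvs, tris = make_skydome(radius=100.0, rings=6, segments=16)
    print(len(verts), "vertices,", len(tris), "triangles")
```

The dome is typically drawn centered on the camera so it never appears to get closer, which is a large part of why such a simple mesh reads as a convincing sky.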